BLOG

May 24th, 2013

Illegal downloads and piracy are better for Music than legal streaming

I present here my reflections both as a music consumer and as a musician. One fundamental question is the following: has our experience of music improved or deteriorated with the new means of music distribution over the past decade? The first answer that comes to mind: we have never had such easy, nearly instant access to so much music; an internet connection is enough for a person in a less developed country to access music that was unreachable just a few years ago. The music streaming model is the latest stage in an inevitable evolution towards server- and subscription-based content: access everything, anywhere, anytime, without "carrying" it. The elimination of ownership is not neutral. Owning a physical object is part of our relationship with a piece of art; as human beings, touch is one of our fundamental senses and we like to touch what we love, and interestingly, the dematerialisation brought by the internet is showing its limits in this respect. One positive result is the relatively good health of live music, which still attracts large crowds and answers our search for a unique music experience.

There have been many government actions against internet piracy, one officially stated objective being to defend creators' right to earn a living from their work. Paradoxically, we are now seeing a rapid expansion of streaming services, which have left artists poorer than ever (one needs thousands of plays on Spotify or Deezer just to buy a glass of whisky!). If this model prevails and ultimately replaces music downloads, artists will no longer earn anything from digital music, while physical CD sales continue to shrink at the same time. Meanwhile, service providers and major labels profit from aggregating the revenues generated by the streams: the aggregators are kings, and the artists get the scraps.

Is the music consumer really a winner in this evolution? Does access to more content really bring quality and improve the listening experience? My personal answer is a definite no. While streaming services like Spotify are a genuinely good discovery tool, I have never found listening to streamed music satisfying. It never equals the detailed listening a CD offers, the CD's better, uncompressed audio quality being one factor. As our lifestyle has also become more "nomadic" and we mostly listen to music on the move from iPods and other MP3 players, I still find it more satisfying to build my own music library from carefully chosen albums than to have a service automatically feed me an infinite choice of songs. This is of course a very subjective view, but I recently rediscovered how much better it is to listen to music from a CD, and I would invite anyone to do the same!

There is one constant in our world: what is rare is precious; what is abundant is diluted and less valuable, if not valueless. This is not just economic theory but a timeless constant of human perception. We place more value on rare moments than on repetitive ones, for instance. In the same way, we like to "deserve" what we see or listen to. Our artistic appreciation is more intense when we take an active part in the experience, whether visiting a painting exhibition or going to a concert or the theatre. We are keen to get a "return" on our effort and to derive more pleasure from it, in a positive sense. Conversely, streaming services contribute to the further devaluation of music, among other art forms, and reduce the pleasure we take in listening to it. The listener can easily scroll through thousands of songs, and the music he or she should listen to is automatically suggested, resulting in a very passive attitude. It is nearly the fast-food equivalent of music: you barely have time to finish one meal before you are invited to consume another. To me, this new legal model of music consumption is worse than piracy. Music piracy has the benefit of encouraging an active attitude towards music, because part of its essence lies in the cat-and-mouse game between pirates and the authorities. The content rarely stays safely in the same place. It is the virtual extension of the old underground traffic in bootleg tapes. Those who make the effort to go and hunt for music content are usually passionate about music. Some studies have even shown that most of them tend to buy a portion of the music they download, whether to ease their guilt or because they are happy with the albums they were able to preview for free. I would venture to bet that, on average, a person who downloads a lot of music illegally ultimately buys more legal music than a person who doesn't pirate.

Therefore, as a musician, I would much rather see my music pirated than take part in a server-based distribution model that further devalues music and also damages our relationship with art in general. We have yet to see a similar system elsewhere where, say, for £5 a month you could drink as much beer as you want in any London pub! (There is, admittedly, the argument that duplicating an audio file technically costs nothing, but in my view this justification is not sufficient, as I shall discuss later.) If music streaming services imposed a limit on the number of plays for each song, they would fulfil a legitimate and much-needed role as a discovery tool, contributing to a virtuous circle in music distribution.

There is no point in praising, regretting or complaining about the current evolution. It is simply an inevitable result of technological advances. The internet has allowed independent musicians like me to publish their music without seeking support from labels. Access to music is, overall, much more democratic. However, streaming-based services offer an illusion of progress. Assessing their impact with a cool head, and acknowledging that technological change is not necessarily synonymous with artistic and social progress, will allow us to look for new directions and innovations that may ultimately benefit both artists and consumers.

March 28th, 2013

Composing music in a world of musical overabundance

Making music naturally leads one to reflect on how we listen to music today and to try to understand how people's relationship with it is evolving. Listening habits range from those who carefully listen to full albums in their living room, to those who use music as a background while they work or exercise, to those who randomly shuffle across thousands of songs (legally acquired or not!) on their iPods, and finally to those who only hear music through ringtones. Music serves a different purpose for each person and is tied to a context and situation. While the musician has control over the music-making process, the way people consume the music is completely out of his control and belongs to the listeners. Although music consumption habits vary greatly, the general trend is that we are fed far more music than before, just as we are fed far more news stories, because multimedia has become omnipresent in both our professional and personal lives and because music is more accessible and much cheaper. The human brain naturally struggles to absorb all this information in so short a time. The next news item overrides the previous one, leaving no time to anchor and digest it. Much the same happens with music. Understanding a piece of music requires repeated listening, but why bother when thousands of other songs are waiting to be heard, just a click away? There is a correlation between the growing abundance of musical content and our shrinking attention span for music. As consumers, we risk falling into the same habit as radio programmers: listening to the first few seconds and skipping to the next track if nothing obviously catchy is heard.

At the same time, the format in which music is published is shaped by its physical medium. The hit song format came from the physical constraints of 78-RPM and later 7" records. Albums were limited to about 44 minutes by 33-RPM LP records and got somewhat longer (up to 74 minutes) with the CD format. Recent studies have shown that people now tend to buy more individual songs than albums online, and the music industry is naturally influenced by this behaviour. For commercially oriented artists, it may therefore not be worth investing in a full-length album. We see more and more of them releasing singles, EPs, or albums that look more like collections of singles. Although understandable, I find this unfortunate, both as a musician and as a music consumer. It is getting worse with the growth of music streaming services such as Spotify; more on that in a future article.

In this context, composing a full-length album has become a quite different and much more deliberate act. My passion for music comes in good part from listening to albums, from appreciating the coherence of their structures and sound elements. Pink Floyd's Dark Side of the Moon would not have reached its cult status if its songs had been released in isolation. Dvořák's Symphony "From the New World" would not have its dramatic impact if its movements were taken out of their context. Oum Kalthoum's vocal virtuosity would not have reached perfection without the long, epic time format of classical Arabic music improvisation. These are of course just a few of the many examples that formed my musical taste across different genres, and that made me keen to compose albums rather than single songs. Arranging the order of the tracks is like ordering the chapters of a book. Lasting emotion and impact need time to develop and peak: a three-minute single will not create the lasting attachment that a full, coherent album can bring. Progression and contrast are fundamental elements of music; an album or a classical symphony is more likely to survive the test of time when it tells a story and immerses the listener. To take a cinematic analogy, no one would think of splitting a feature-length film into its individual scenes and selling them separately.

When I uploaded my latest 13-track album Rise & Fall to SoundCloud after more than a year of work, I realized once again that it was just a tiny element in a giant, continuous stream of sound data. It would quickly be pushed down the stack as others uploaded new sounds. I can see some independent artists being influenced, if not enslaved, by this mechanism and publishing tracks every week or so in order to keep up with the stream, worried about losing an already volatile audience. That seems a mistake to me. It makes musicians try to keep a pace that is unnatural to music making and to art in general. It makes them fall into the trap of some web companies that want to encourage this attitude just to generate more traffic on their sites. Just as the internet has not changed the fact that it still takes nine months to give birth to a child, it has not changed the fact that it still takes time and pain to compose and produce an album. This permanent fact is at least reassuring!

March 26th, 2013

The Sound - base waveforms

- The sine wave: a sound made of a single frequency with no overtones. It is the simplest type of waveform and the "atom" of all sounds, i.e. any sound is a combination of sine waves.

- The triangle wave: although richer, a triangle wave sounds fairly close to a sine wave because its overtones are weak. The Fourier decomposition below shows that it contains only odd multiples of the fundamental, and that their amplitude decreases quickly (as the inverse square of the harmonic number), so they soon become negligible.

- The square wave: it is also made of odd multiples of the fundamental frequency, but the overtone amplitude decreases more slowly (as the inverse of the harmonic number), so the sound is richer. Its closest acoustic equivalent is the clarinet. It belongs to a larger class of waveforms called pulse waves.

- The sawtooth wave: of the waveforms presented here, it is the richest, as it contains all harmonics, odd and even. Because of this richness it is a frequent starting point for rich pads, brassy sounds or fat bass sounds.

For reference, and at the risk of scaring some readers, here are the corresponding Fourier decompositions. They show that, although these signals have simple geometric shapes, they are still complex sums of sine waves. Note also how the amplitude of the overtones decreases across the successive harmonics.

\begin{equation} Sine(f_0, t) = \sin\left(2\pi f_0 t\right) \end{equation}
\begin{equation} Triangle(f_0, t) = \sum_{n=0}^{+\infty} \frac{(-1)^n}{(2n + 1)^2}\cdot\sin\left(2\pi (2n + 1) f_0 t\right) \end{equation}
\begin{equation} Square(f_0, t) = \sum_{n=0}^{+\infty} \frac{1}{2n + 1}\cdot\sin\left(2\pi (2n + 1) f_0 t\right) \end{equation}
\begin{equation} Sawtooth(f_0, t) = \sum_{n=1}^{+\infty} \frac{(-1)^n}{n}\cdot\sin\left(2\pi n f_0 t\right) \end{equation}
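To hear or plot these waveforms, one can simply add up a finite number of terms of each series. The short Python sketch below follows the four formulas above term by term; the function names `waveform` and `harmonic_amplitudes` and the default of 1000 terms are illustrative choices, not part of any particular synthesizer. Because the formulas omit the usual normalization constants (8/π² for the triangle, 4/π for the square and 2/π for the sawtooth), the partial sums peak at π²/8 and π/4 rather than 1, which makes a handy sanity check.

```python
import math


def waveform(kind: str, f0: float, t: float, n_terms: int = 1000) -> float:
    """Value at time t (seconds) of a base waveform with fundamental f0 (Hz),
    computed as a truncated Fourier series following the formulas above.
    No normalization constants are applied."""
    if kind == "sine":
        return math.sin(2 * math.pi * f0 * t)
    total = 0.0
    if kind in ("triangle", "square"):
        for n in range(n_terms):
            k = 2 * n + 1  # odd harmonics only: 1, 3, 5, ...
            if kind == "triangle":
                amplitude = (-1) ** n / k**2  # decays as 1/k^2
            else:
                amplitude = 1 / k  # decays as 1/k
            total += amplitude * math.sin(2 * math.pi * k * f0 * t)
    elif kind == "sawtooth":
        for n in range(1, n_terms + 1):  # every harmonic: 1, 2, 3, ...
            total += (-1) ** n / n * math.sin(2 * math.pi * n * f0 * t)
    else:
        raise ValueError(f"unknown waveform: {kind!r}")
    return total


def harmonic_amplitudes(kind: str, count: int) -> list[float]:
    """Magnitude of harmonics 1..count relative to the fundamental
    (index 0 is the fundamental itself); signs are ignored."""
    amps = []
    for k in range(1, count + 1):
        if kind == "sine":
            amps.append(1.0 if k == 1 else 0.0)
        elif kind == "triangle":
            amps.append(1 / k**2 if k % 2 else 0.0)
        elif kind == "square":
            amps.append(1 / k if k % 2 else 0.0)
        elif kind == "sawtooth":
            amps.append(1 / k)
        else:
            raise ValueError(f"unknown waveform: {kind!r}")
    return amps
```

For example, `harmonic_amplitudes("triangle", 5)` gives `[1.0, 0.0, 0.1111111111111111, 0.0, 0.04]` while `harmonic_amplitudes("square", 5)` gives `[1.0, 0.0, 0.3333333333333333, 0.0, 0.2]`, which makes the faster decay of the triangle wave's overtones visible.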

February 25th, 2013

The Sound - some basics

What's in a sound? What makes a sound richer or more complex than another?

When we perceive music, our ear is first sensitive to the timbre of a sound before appreciating its organisation. As in a film, we first take in the setting before entering the story.

Physically speaking, a sound is the result of a vibration, and can be carried by different media such as air, water, wood or metal. A sound wave vibrates at a frequency that determines its pitch (i.e. whether we perceive the sound as low or high). A sound is a superposition, or spectrum, of different sound waves, and this spectrum defines its timbre. It is what allows us to tell apart a piano, a violin or a trumpet, even when these instruments play the same note.

Audible sounds lie roughly between 20 Hz and 20 kHz (the standard A4 note in Western music is usually set at 440 Hz). A frequency is the number of cycles a wave completes per second; its inverse, the period, is the time the wave takes to cycle back to its starting position, for instance along a string. That's why bowed string instruments come in different sizes: a double bass needs longer strings than a cello or viola to generate lower sounds, i.e. lower frequencies, because the wave has a longer distance to travel to complete a full cycle along the string.
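The relationship between frequency, period and string length can be checked with a few lines of Python. This is a minimal sketch of an idealised string (perfectly flexible, fixed at both ends), using the standard result that the wave speed on a string is the square root of its tension divided by its mass per unit length; the function names and the tension and density values in the usage note are hypothetical.

```python
import math


def period_seconds(frequency_hz: float) -> float:
    """Time taken by one full cycle: the period is the inverse of the frequency."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return 1.0 / frequency_hz


def string_fundamental_hz(length_m: float, tension_n: float,
                          mass_per_length_kg_m: float) -> float:
    """Fundamental frequency of an ideal string fixed at both ends.

    The wave travels at v = sqrt(tension / mass per unit length), and a full
    cycle means going along the string and back (a distance of 2 * length),
    so f = v / (2 * length).
    """
    wave_speed = math.sqrt(tension_n / mass_per_length_kg_m)
    return wave_speed / (2.0 * length_m)
```

For instance, `period_seconds(440.0)` returns about 0.00227, i.e. one cycle of A4 lasts roughly 2.3 milliseconds, while the audible range spans periods from 50 ms (20 Hz) down to 0.05 ms (20 kHz). Doubling the string length with the same tension and string, e.g. `string_fundamental_hz(1.30, 60.0, 0.001)` versus `string_fundamental_hz(0.65, 60.0, 0.001)`, halves the fundamental, lowering the note by one octave.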

The simplest sound is therefore made of a single sound wave oscillating at a single frequency. Such a pure sound, or sine wave, is hardly ever found in nature but can be generated electronically. In fact, one of the oldest electronic instruments, the Theremin, produces a sound close to this purity. I will write about this fascinating instrument in a later article.

A more complex sound is made of several sound waves oscillating at different frequencies and amplitudes. For example, when you listen to a single note on a guitar, you may get the impression of hearing other notes or harmonies in the background, which makes the sound richer. What you hear are actually these additional frequencies. Each frequency in the spectrum has its own amplitude (loudness), which also shapes the final timbre.

More technically, a sound can be decomposed into a spectrum of oscillating frequencies using Fourier decomposition. Although this will ring a bell for the mathematically-trained reader, I will not go further into the maths at this stage.

\begin{equation} s(t) = \sum_{i=1}^{+\infty} a_i\cdot\sin\left(2\pi f_i t + \varphi_i\right) \end{equation}

Each sine component in this sum has its own frequency, amplitude and phase. The audio sample here demonstrates, in turn, a pure sine wave, then superpositions of two and three sine waves oscillating at different frequencies but with the same amplitude. These are fairly uninteresting sounds, but you may notice that the last one already evokes an electronic organ; this is expected, as organ sounds are typically created using additive synthesis, i.e. by adding simple waveforms together. The picture shows a snapshot of the simple patch I created to generate these sounds using the Moog Modular V. More in the next articles...
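A similar demonstration can be reproduced without a modular synthesizer by evaluating the sum s(t) directly. The Python sketch below, which uses only the standard library, samples a sum of sine components and writes it to a 16-bit mono WAV file. It is a generic additive-synthesis illustration, not the Moog Modular V patch, and the function names `additive` and `write_wav` are hypothetical.

```python
import math
import struct
import wave

SAMPLE_RATE = 44100  # samples per second (the CD rate)


def additive(partials, duration_s: float, sample_rate: int = SAMPLE_RATE) -> list[float]:
    """Sample s(t) = sum of a_i * sin(2*pi*f_i*t + phi_i).

    partials: iterable of (frequency_hz, amplitude, phase_rad) tuples.
    """
    partials = list(partials)
    n_samples = int(duration_s * sample_rate)
    return [
        sum(a * math.sin(2 * math.pi * f * (i / sample_rate) + phi)
            for f, a, phi in partials)
        for i in range(n_samples)
    ]


def write_wav(path: str, samples: list[float], sample_rate: int = SAMPLE_RATE) -> None:
    """Write mono 16-bit PCM, scaling the loudest sample to 80% of full scale."""
    peak = max((abs(s) for s in samples), default=0.0) or 1.0
    frames = b"".join(struct.pack("<h", int(32767 * 0.8 * s / peak)) for s in samples)
    with wave.open(path, "wb") as out:
        out.setnchannels(1)
        out.setsampwidth(2)  # 2 bytes = 16 bits per sample
        out.setframerate(sample_rate)
        out.writeframes(frames)
```

For example, `write_wav("three_partials.wav", additive([(220.0, 1.0, 0.0), (440.0, 1.0, 0.0), (660.0, 1.0, 0.0)], 2.0))` writes two seconds of three equal-amplitude sine waves. The frequencies are arbitrary choices here, since the ones used in the original sample are not given; picking whole-number multiples of a fundamental, as in this example, gives the organ-like character mentioned above.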

January 27th, 2013

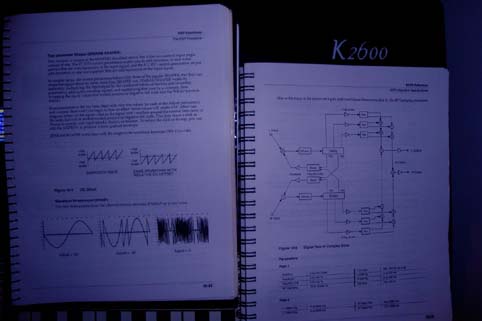

Inside the Studio: the Kurzweil K2600 synthesizer

This is the first article introducing the instruments of my studio. It is natural to start with the first synthesizer I ever used, the Kurzweil K2600, on which I learnt all aspects of sound design. It is still at the centre of all my composition work.

First, a little background about its inventor, Ray Kurzweil, a fascinating character. Back in the 70s, he created pioneering optical character recognition systems and reading machines for the blind. Stevie Wonder asked for his help to develop an electronic instrument able to reproduce the natural sound of acoustic instruments. Hence the birth of Kurzweil Music Systems in 1982 and of their first synthesizer model, the K250, released in 1984. Although Kurzweil left the company in 1990, later models continued to be developed by a highly respected team of engineers, with an impressive range of synthesis algorithms. Much about his biography, talks and audacious predictions can be found online, so I will not expand further for now.

At the time I purchased the K2600, I was still far from writing my own compositions, having learnt to play the piano quite late. But I knew that if I ever wanted to do so one day, I would need a professional synthesizer to which I could become emotionally attached, the way a guitarist is attached to his guitar. The K2600 fulfilled my wishes. Its sounds, both electronic and acoustic samples, had more warmth to my taste than those of other synths, and proved to me that the charge of coldness often levelled at electronic instruments was totally unfair. Its semi-weighted keyboard has a very natural touch, and its pressure sensitivity can be adjusted very precisely. It strikes a good balance between the physical contact of a piano and the lighter touch needed to play a wider range of music styles. It is a full "workstation": a keyboard, a synthesizer, a sampler, an effects processor and a sequencer. I will expand on these different features in a future article.

Two families of sounds struck me from the start. The first is the organ sounds, which are not based on recorded samples but fully recreated by its own sound generator and emulator; one can create anything from a very realistic pipe or tonewheel organ to a much more alien-sounding synth organ. Expect some of these in future albums! The second is its dark string sound, one of my favourites, which I have already used in various modified forms on my albums. It has a distinctive, recognizable timbre, ideal for creating threatening atmospheres. Depending on how I modify the original sound, I can make it sound either acoustic or electronic and ethereal, which is what a synthesizer is for: not to imitate a real ensemble but rather to open doors to totally new soundscapes.

What is impressive is that the K2600 reflects an older but efficient way of using electronic memory. For example, among the synths of that period, it probably has the most natural-sounding piano samples, yet they occupy only 8 megabytes. At a time when memory chips were still expensive, engineers had to be particularly ingenious in their programming, whereas today's instrument sample libraries take up hundreds of gigabytes. Moreover, Kurzweil Music manufactures its own chips, and this clearly shows in the quality of its sounds.
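To give an idea of what 8 megabytes means, here is a rough back-of-the-envelope calculation. It assumes 16-bit mono samples at the CD rate of 44.1 kHz and 1 MB = 2^20 bytes; these are illustrative assumptions on my part, not published K2600 specifications:

\begin{equation} \frac{8 \times 2^{20}\ \text{bytes}}{2\ \text{bytes/sample} \times 44\,100\ \text{samples/s}} = \frac{8\,388\,608}{88\,200}\ \text{s} \approx 95\ \text{s} \end{equation}

Under these assumptions, about a minute and a half of mono audio would have to cover the entire keyboard, which suggests how sparingly samples had to be used, for example by looping them or sharing one recording across several keys, common techniques in samplers of that era.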

I have always been interested in the history of electronic instrument manufacturing. It is not so different from the manufacture of acoustic instruments: it comes down to science and physics serving art, a bridge between engineering and music. The engineers are often musicians themselves, and this clearly shows when one digs into their designs.

Another Kurzweil specificity is its so-called Variable Architecture Synthesis Technology (VAST), which allows one to build a near-infinite (vast!) number of combinations of filters and effects. The signal paths can be programmed by the musician in a modular fashion. As a first synth it was not the easiest entry point, but its complex architecture and detailed technical manual allowed me to break sounds apart and understand them to their roots.
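The idea of a signal path programmed "in a modular fashion" can be illustrated with a toy model in which each processing block is a function and a patch is an ordered chain of blocks. This is only a generic Python sketch: the block names (`gain`, `one_pole_lowpass`, `hard_clip`, `chain`) are hypothetical and do not reproduce Kurzweil's actual VAST algorithms.

```python
from typing import Callable, List

# A processing block takes a list of samples and returns a new list.
Block = Callable[[List[float]], List[float]]


def gain(db: float) -> Block:
    """Scale the signal by a gain given in decibels."""
    factor = 10 ** (db / 20)
    return lambda samples: [x * factor for x in samples]


def one_pole_lowpass(coeff: float) -> Block:
    """Simple smoothing filter: y[n] = y[n-1] + coeff * (x[n] - y[n-1]).

    coeff lies in (0, 1]; smaller values remove more high frequencies.
    """
    def process(samples: List[float]) -> List[float]:
        y, out = 0.0, []
        for x in samples:
            y += coeff * (x - y)
            out.append(y)
        return out
    return process


def hard_clip(limit: float) -> Block:
    """Crude distortion: clamp every sample to [-limit, limit]."""
    return lambda samples: [max(-limit, min(limit, x)) for x in samples]


def chain(*blocks: Block) -> Block:
    """Connect blocks in series: the output of each one feeds the next."""
    def process(samples: List[float]) -> List[float]:
        for block in blocks:
            samples = block(samples)
        return samples
    return process
```

For instance, `chain(gain(20.0), hard_clip(1.0), one_pole_lowpass(0.3))` boosts, distorts and then darkens a signal; swapping the order of the blocks gives a different result, which is why a freely programmable signal path offers so many combinations.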

Going back to the root of a sound, its nuclear level, from its physics to its place in the music, is one of the drivers of my musical research... going deeper into the crater. And that is what I will try to share in a long series of articles on this blog.

January 7th, 2013

Music making: mixing the sounds together

A partial snapshot of the mixing console during the track "Rise & Fall - Overture"

Mixing a song is the process of balancing the volumes of the instruments and sounds so as to combine them into a single audio piece. It is a fundamental part of the production process and largely determines the perspective of the music. As with cooking a good meal, the ingredients need to be correctly dosed to taste good. The more sounds there are, the more complex it gets.

It starts at an early stage of recording, as one needs a reasonable initial mix to further develop and orchestrate a composition. I usually have a good idea of which classes of sounds will dominate the final track. These are usually recorded first to create the backbone, even though their exact sound design may not be completed yet. When the song has a drum sequence, it is also recorded early on to set the beat.

There are some typical mixing patterns for mainstream music styles. For example, if the song contains vocals, the main vocal part will usually dominate the others; in a metal song, the drum and guitar parts need to sit high in the mix, and so on. For well-defined styles, one can find good documentation and advice on typical mixing settings. Major artists, on the other hand, usually hire dedicated mixing engineers, who bring sophisticated technical skills and a well-trained ear to optimize their songs.

One of my interests is precisely to depart from the traditional distribution of sounds and instead give space to more unusual elements, which I intend to push further in my new works. Additional and accidental sounds are important to give "spice" to a track. Without lyrics, the music still has to make a statement, and the trick is to find a good balance between the core sounds and those that bring nuance and contrast. In the absence of vocals, the ear is in particular much more sensitive to the texture, spatial arrangement and distribution of the sounds, as it shifts its focus from a narrative to a more "impressionist" listening. Care must be taken over when a sound appears and disappears. This is even more the case if the piece is not melodic: with no melody line to support the listening, it becomes much more abstract.

The mix can also be massively affected by the effects applied to the sounds (e.g. reverberation, chorus, flanger), but those will be discussed in a later article. Panning (i.e. the distribution of a sound between the left and right channels in stereo) is also part of the mixing process.
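At its simplest, a digital mix is a weighted sum: each track is multiplied by its fader gain, split between the left and right channels according to its pan position, and then added to the other tracks. The Python sketch below assumes a constant-power pan law, which is one common choice among several (consoles and DAWs differ), with fader levels in decibels; the function names are illustrative.

```python
import math
from typing import List, Sequence, Tuple


def db_to_gain(db: float) -> float:
    """Convert a fader level in decibels to a linear amplitude factor."""
    return 10 ** (db / 20)


def constant_power_pan(pan: float) -> Tuple[float, float]:
    """Left/right gains for pan in [-1 (hard left), +1 (hard right)].

    Using cos/sin keeps left**2 + right**2 == 1, so the perceived loudness
    stays roughly constant as a sound moves across the stereo field.
    """
    if not -1.0 <= pan <= 1.0:
        raise ValueError("pan must be between -1 and 1")
    angle = (pan + 1) * math.pi / 4  # 0 (left) .. pi/2 (right)
    return math.cos(angle), math.sin(angle)


def mix(tracks: Sequence[Tuple[Sequence[float], float, float]]) -> Tuple[List[float], List[float]]:
    """Sum mono tracks given as (samples, gain_db, pan) into a stereo pair."""
    length = max((len(samples) for samples, _, _ in tracks), default=0)
    left = [0.0] * length
    right = [0.0] * length
    for samples, gain_db, pan in tracks:
        g = db_to_gain(gain_db)
        lg, rg = constant_power_pan(pan)
        for i, x in enumerate(samples):
            left[i] += x * g * lg
            right[i] += x * g * rg
    return left, right
```

Because the gains follow a cosine and a sine, a centred sound is sent to each side at about 0.707 (roughly -3 dB) rather than 0.5, which keeps its overall power constant as it moves across the stereo field.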

The music also sounds different depending on the playback device. I mostly work out the mix on the studio speakers, as this is where I can best assess whether the sounds are correctly balanced across the bass, middle and high frequency ranges. I then check the result through headphones, where some of the sounds may be flattened while more detail can sometimes be heard. My rule of thumb is that if a mix sounds good on studio monitors, it will sound good through headphones (although different). This is, however, distinct from having the music rendered correctly on all devices (computer, iPod, radio, hi-fi, lo-fi systems etc.), which is handled in the mastering process and will be discussed later.

I would not go as far as some extreme views claiming that mixing is sometimes more important than the music itself, but bad mixing can definitely ruin everything, and a good mix can make the difference. For me it is an integral and ongoing part of the composition and recording process.

November 30th, 2012

RISE & FALL - A Prelude before the album release on 12-12-12...

"Can We Escape Our Inevitable Destiny?"

Rise & Fall is a crepuscular tale about the cycles of life and civilizations, past, present and future. As the world painfully (but surely) moves into another phase, I found it interesting to give a musical reflection of this very idea and of the fear of ultimate collapse, imminent or not. This apprehension is universal and timeless; it has run through all ages since the first civilizations. It points to the fundamental question of our existence: what will survive us and what will be passed on to the next generations, both as individuals and as a society. Demography, intellectual production, technological advancement and artistic creation are some of the vectors of such transmission. Our ephemeral nature is the source of constant renewal. I will develop this further later, so here I am just giving a very brief overview of the musical concept.

The theme naturally led me to a more orchestral approach. However, I did not want the orchestral tones to sound too acoustic, so most of them are processed through electronic effects or mixed with electronic sounds. I always find the ambiguity of a sound that is neither acoustic nor electronic interesting: in this context it conveys a feeling of uncertainty and insecurity, and it also serves as an attempt to erase the "time print" of the music.

I re-used very few of the sounds from the previous albums, as the concept required starting from fresh ground. The composition, arrangement and recording stages were also sequenced differently, as I had composed almost all the tracks before starting the actual recording. I wanted the themes to be more developed, so I set aside a longer preliminary phase for composing and writing before entering the production stage. I also added some transitions to link some of the tracks together. From the beginning, this album was conceived as one piece in which each movement would flow into the next in a unified manner.

Some aspects of sound design present here will become more prominent in forthcoming albums. Producing a rather developed melody and orchestration while also using deep sound modulations or effects is tricky, and one often has to be sacrificed for the other. The more instruments or sound components there are, the more difficult it is to include an unusual sound design or effect that stands out from the music, as it would lack the space it needs to evolve. A rougher, sound-design-oriented approach is already in the works for a coming album, as soon as time and inspiration allow...

March 19th, 2012

Starting a new album

A new album starts for me with a story, moods and colors, similar to writing a script and then coming up with the themes and sound textures that will illustrate it. In a sense it is like composing a soundtrack without the picture. An album is not a collection of random tracks. It needs to have coherence.

When starting a new composition, the first difficulty is to determine the concept and the sound material. While acoustic instruments provide a relatively well-defined perimeter of sound timbres and textures, the boundaries are much less clear with electronic instruments (synthesizers and/or samplers). Their illusion of freedom and limitless possibilities can easily be disorienting (I will come back to the different types of instruments in a later article).

The musician has to define his own perimeter. Choice is part of the art. Defining the concept is key to establishing a direction and a minimal structure. Some discipline then needs to be maintained so as not to deviate from it, while keeping enough room for spontaneity and the unexpected. In fact, having a precise concept allows improvisations and surprising discoveries to come more naturally, as they will usually fit within some "tolerance limits". Ideas that fall outside the scope can be saved for later projects.

So the approach is to start with two layers of limitations: one that imposes a certain structure (thematic, sound design) and one that limits the scope of other more accidental elements.

I like a project to involve technical challenges. It should not remain within a comfort zone. I like to learn new approaches from a concept, seeking to expand my sound palette. There is therefore a "danger zone" at the beginning of a composition: venturing into new sound territories carries no guarantee of success. So there is this interesting period of uncertainty where nothing is assured, fluctuating between excitement and doubt.

Inspiration is indeed not linear. The notion of inspiration itself is ambiguous, and probably corresponds to some naive perception of the creation process.

It does not exist on its own but rather as the outcome of a lot of experimentation. It is rather a state in which the mind is available to capture the ideas that will become the cornerstone of a creation. You may, for example, go through the same process twice, playing the same notes and manipulating the same sounds, but if your mind is not in the right state, it will not retain the elements that make the difference, and the opportunity for inspiration will be lost. It is difficult to command the brain to be "inspired"! And often, a very small motif or detail can give birth to a whole new track.

Physical state and the time of day or night also have their influence, and there is certainly no rule. Being wide awake is good for more mechanical tasks but does not necessarily help actual composition. Different states may actually produce interesting, contrasting results, each making its own valuable contribution. The constant is that no idea should be thrown away: it is important to keep a record of any that come up, whether thematic or sound-related. One day it will find its place in the compositional equation...